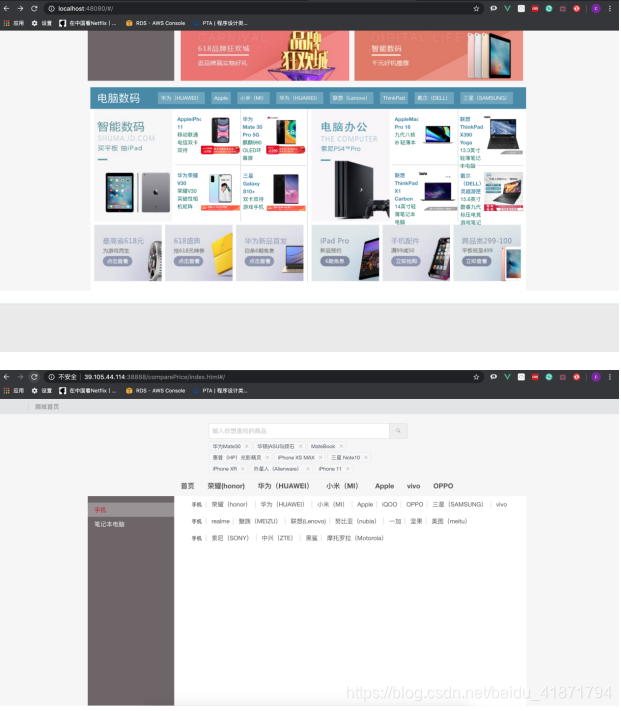

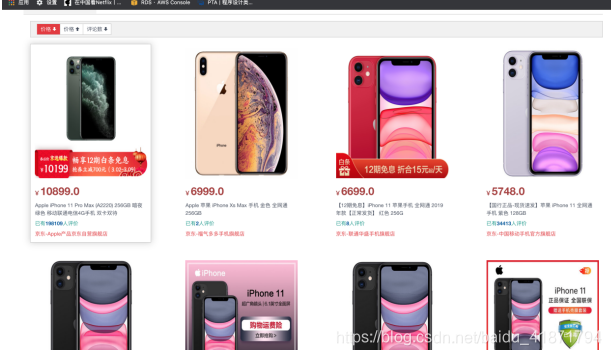

爬取京东苏宁商品信息(手机 笔记本电脑) 以及商品的评论 然后集成到web上,实现了价格评价的比较 并且对每件商品评论进行了情感分析,绘制了评论的词云

https://github.com/ccclll777/JDSNCompare http://39.105.44.114:38888/comparePrice/index.html

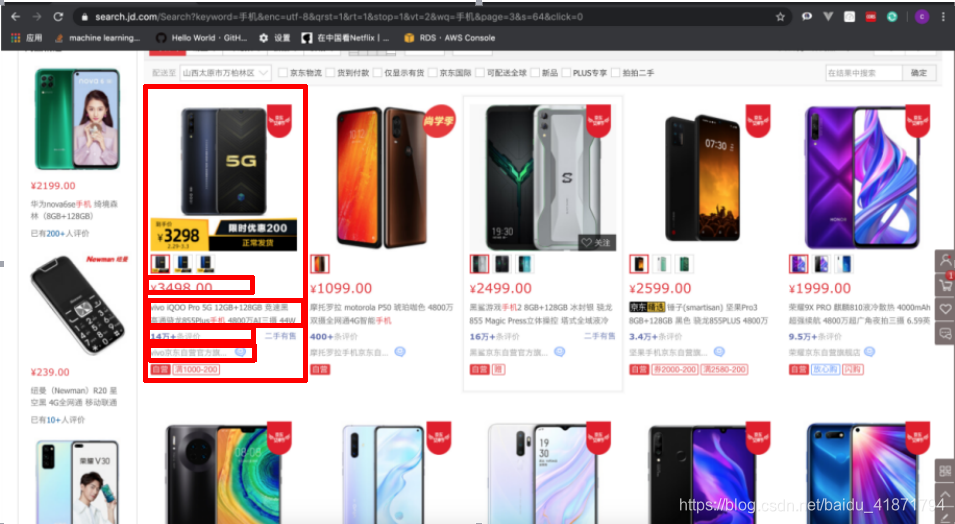

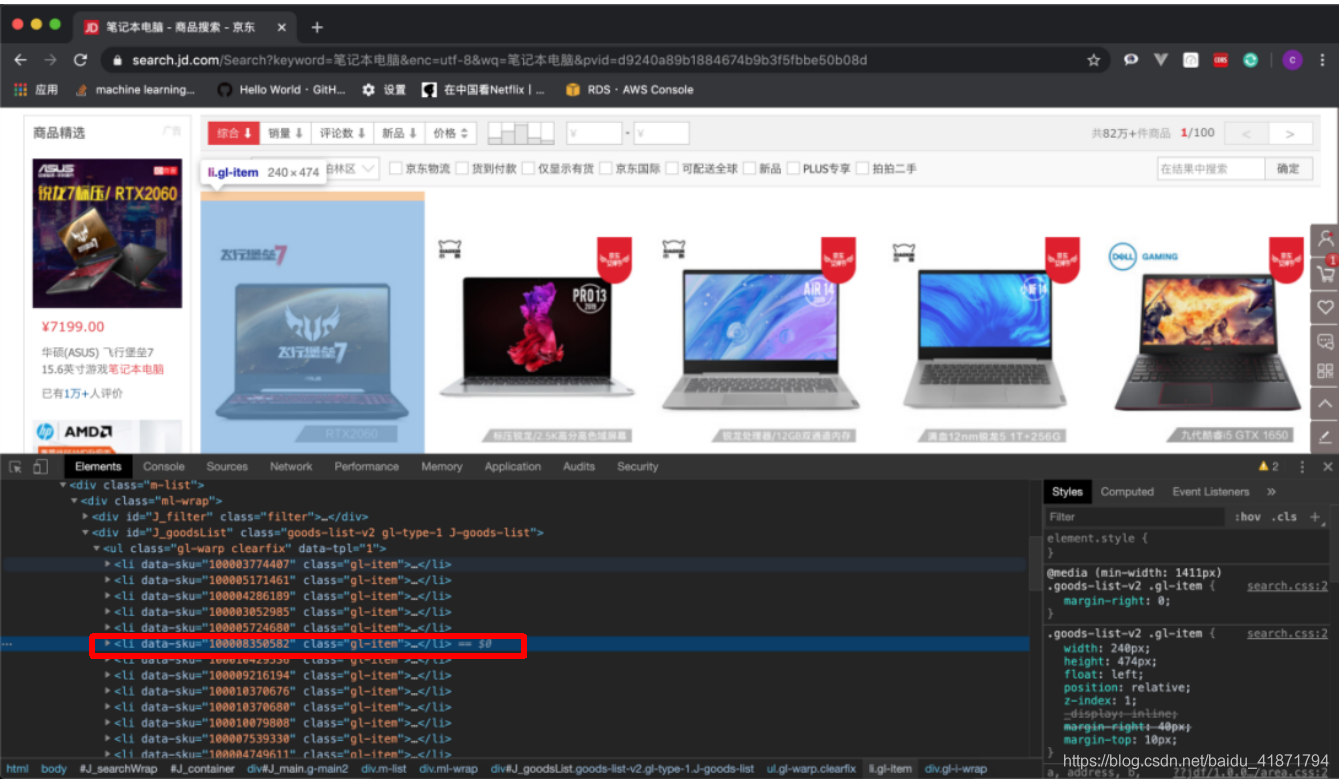

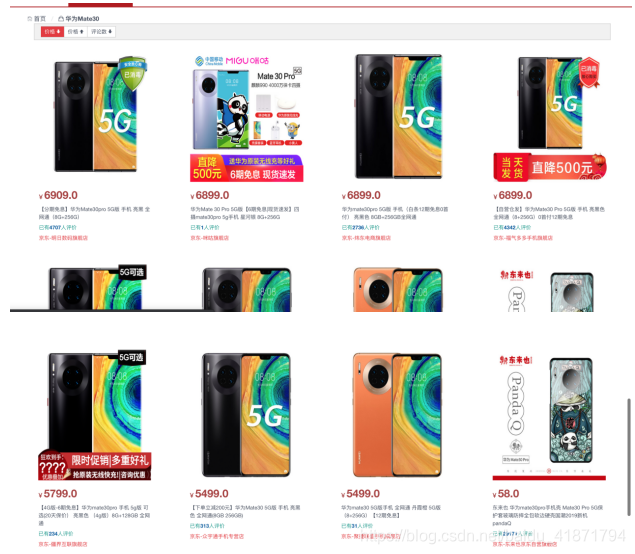

在这里,我爬取了京东的搜索界面,通过关键字“手机”,“和笔记本电脑”,搜索到的信息,目标站点的url为

https://search.jd.com/Search?keyword=手机&enc=utf-8&wq=手机&pvid=15b7aa9229e8404c8c157db86b8563d8 https://search.jd.com/Searchkeyword=笔记本电脑&enc=utf-8&wq=bi ji ben&pvid=65b38d2ff9634a078f7d7d3825a39b5d

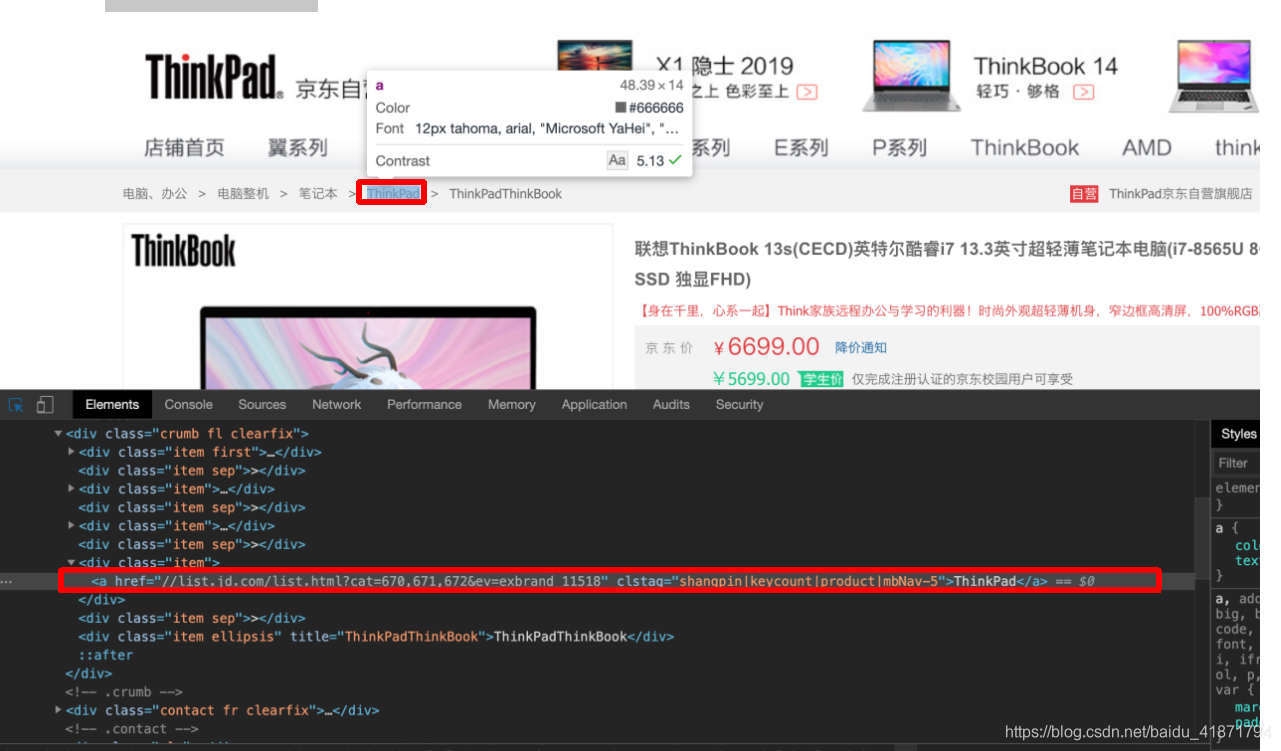

【1】京东网站界面分析

经过总结之后,发现两者的共同点,可以根据任意参数搜索信息。

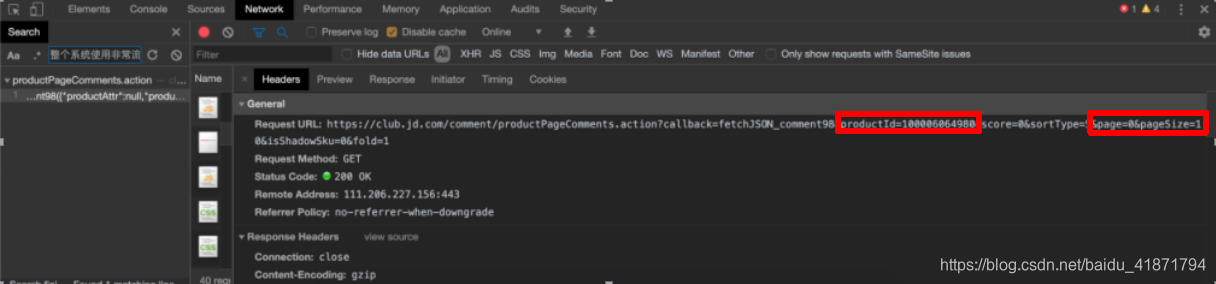

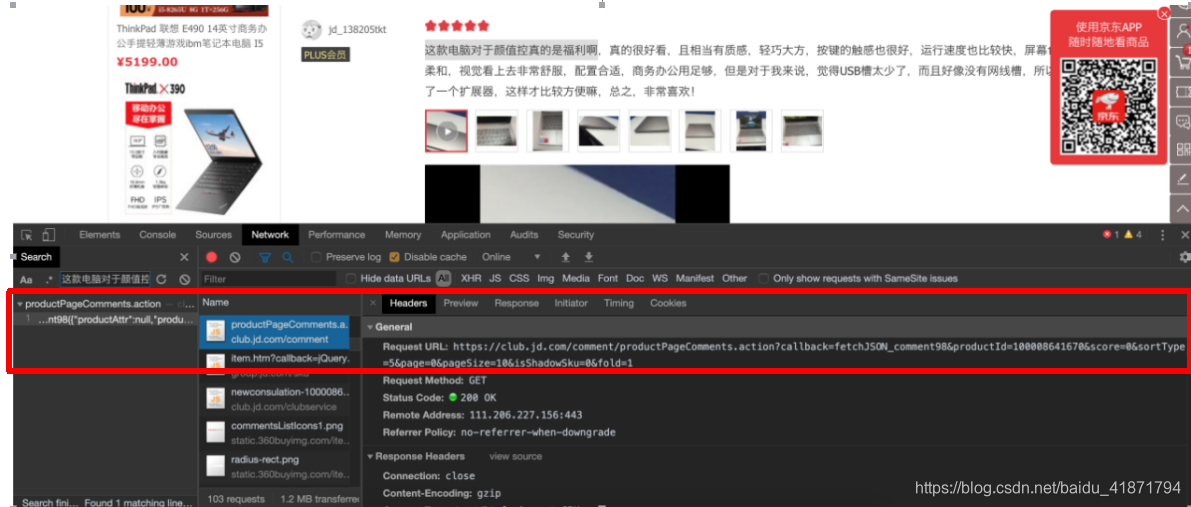

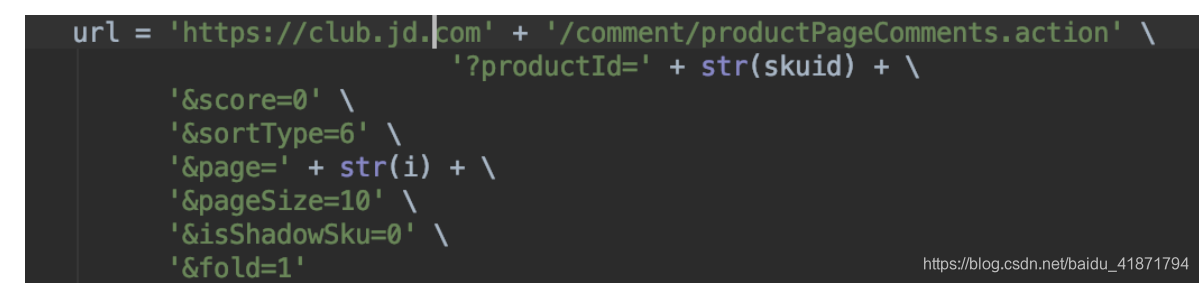

https://club.jd.com/comment/productPageComments.action?productId={}&score=0&sortType=5&page={}&pageSize=10&isShadowSku=0&fold=1

【2】操作过程

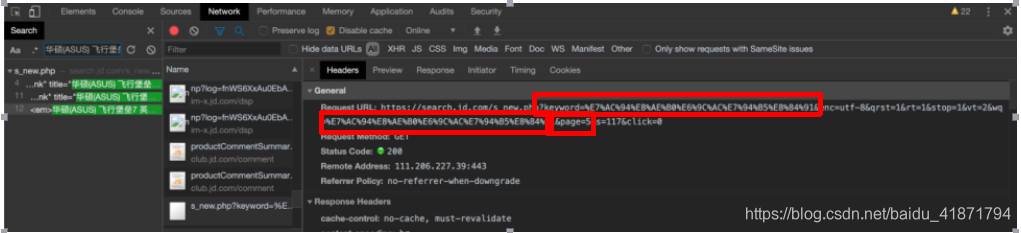

https://search.jd.com/Search?keyword={}&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&wq={}&page={}&s=51&click=0

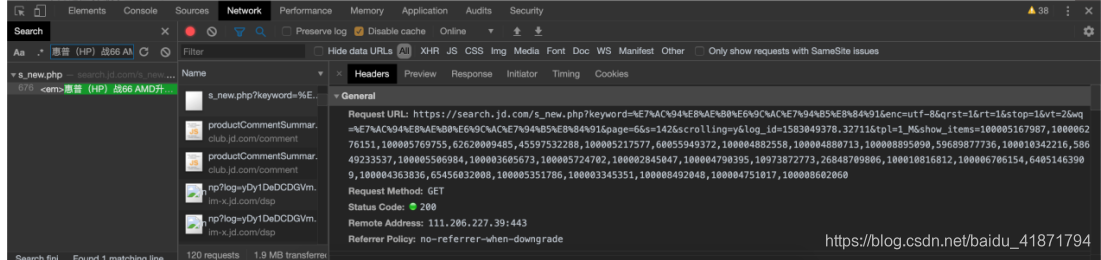

通过向下翻页,发现页面下面的内容是异步加载出来的

https://search.jd.com/s_new.php?keyword=%s&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&wq=%s&page=%d&s=76&scrolling=y&log_id=1582951658.11616&tpl=1_M&show_items=%s

商品详情的url:

.gl-warp .gl-item .gl-i-wrap .p-name a::attr(href)

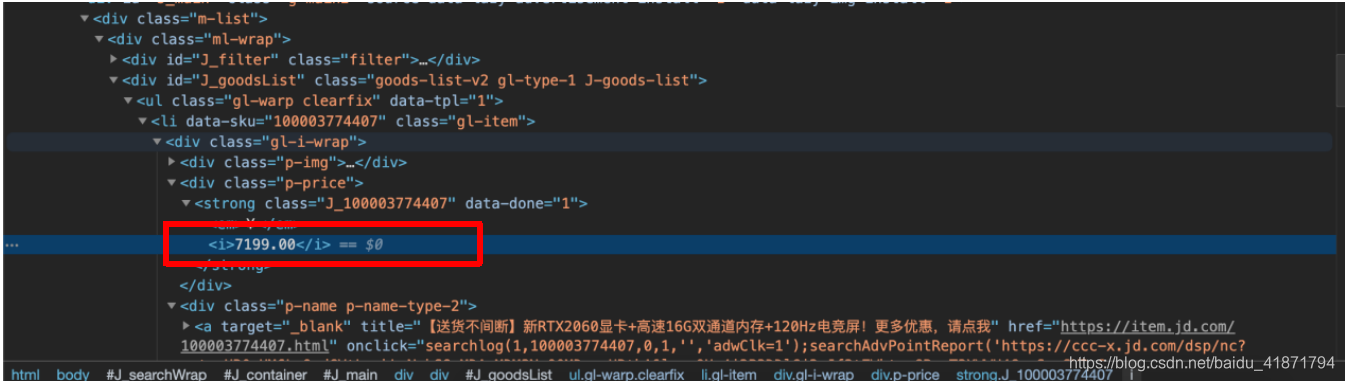

商品价格

.gl-warp .gl-item .gl-i-wrap .p-price strong i::text

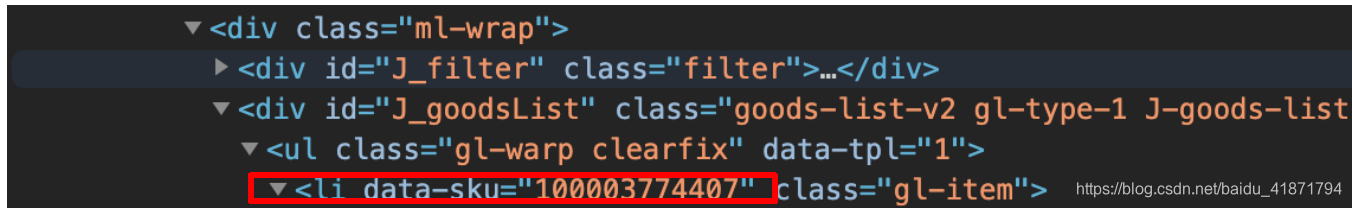

商品的id 即data_sku中的信息

.gl-warp .gl-item::attr(data-sku)

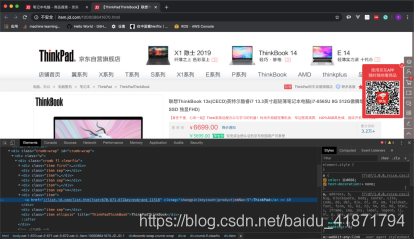

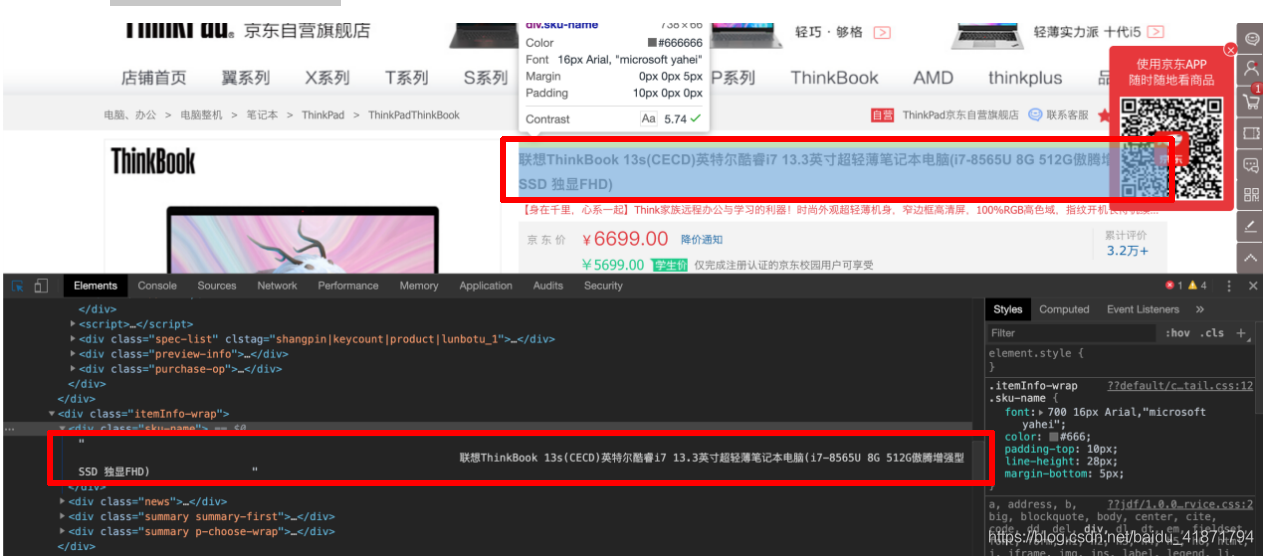

(2)商品的详情界面

.inner .border .head a::text

.item .ellipsis::text

.J-hove-wrap .EDropdown .fr .item .name a::text

根据dom树的位置,通过class选择器层层选择,

.w .product-intro .itemInfo-wrap #spec-img::attr(alt)

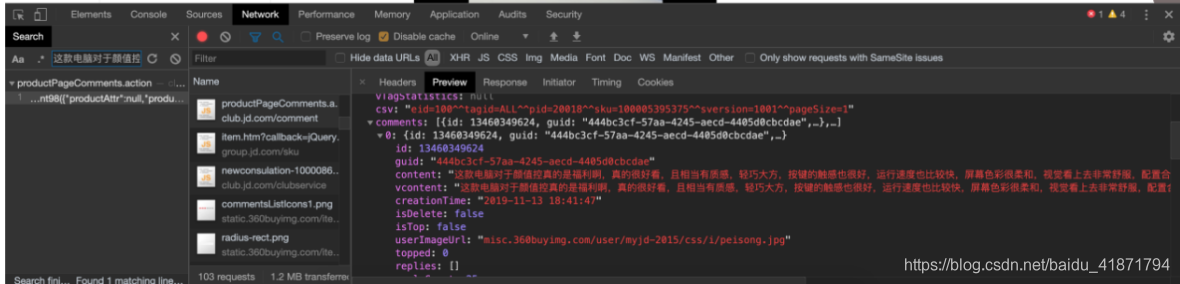

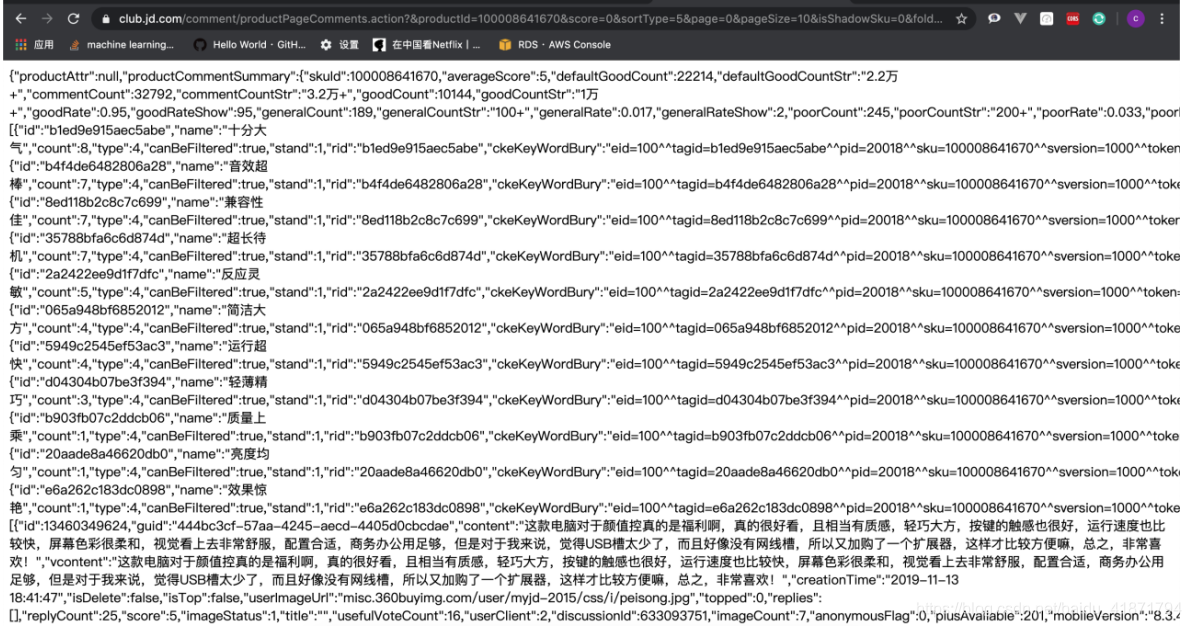

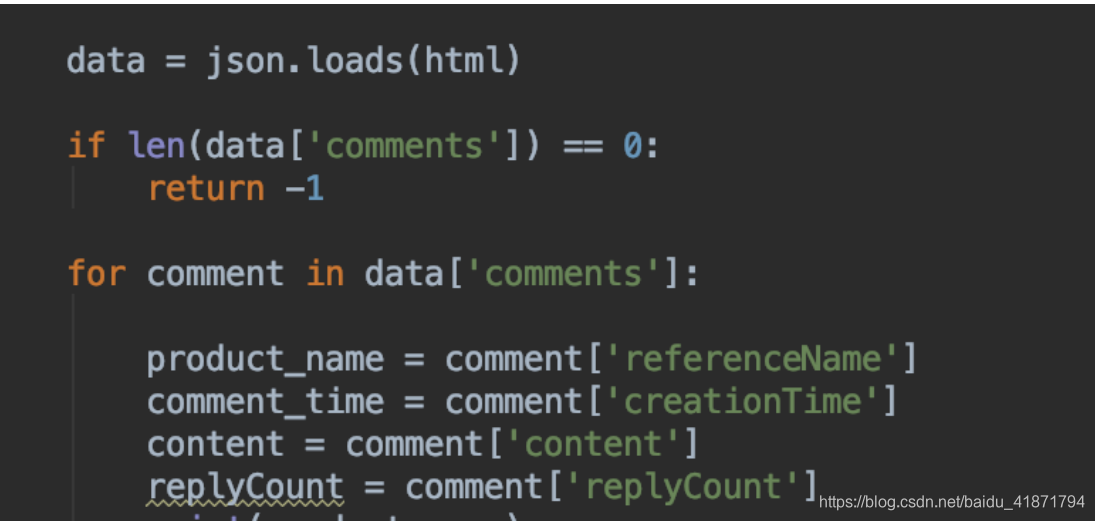

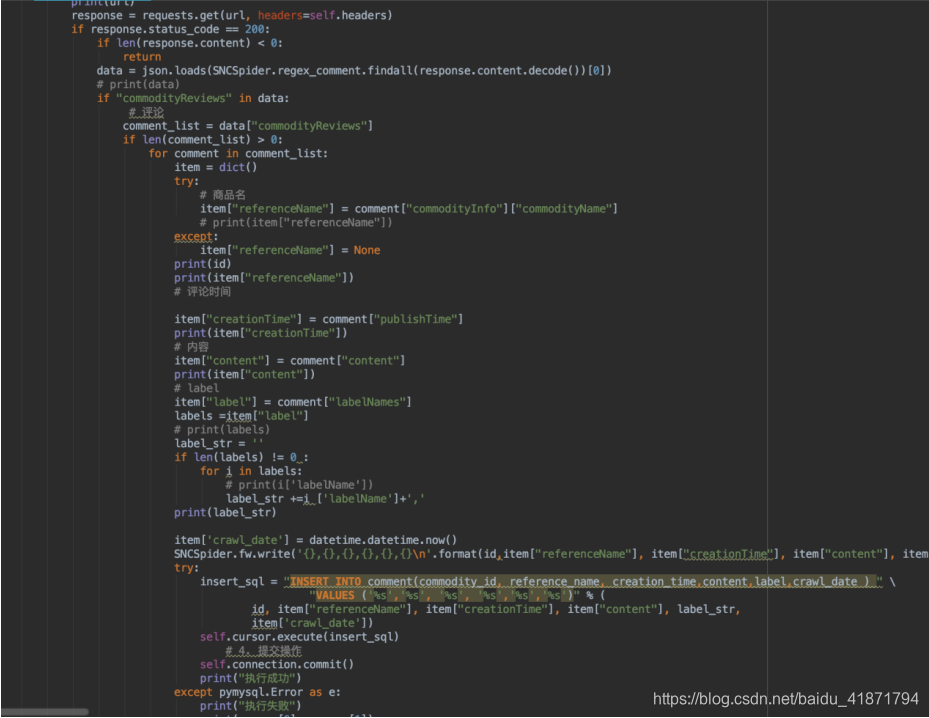

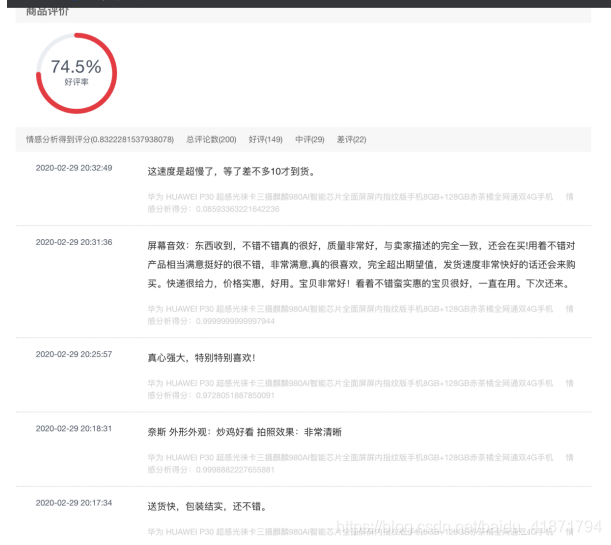

(3)商品评价

skuid为商品的id page为页数

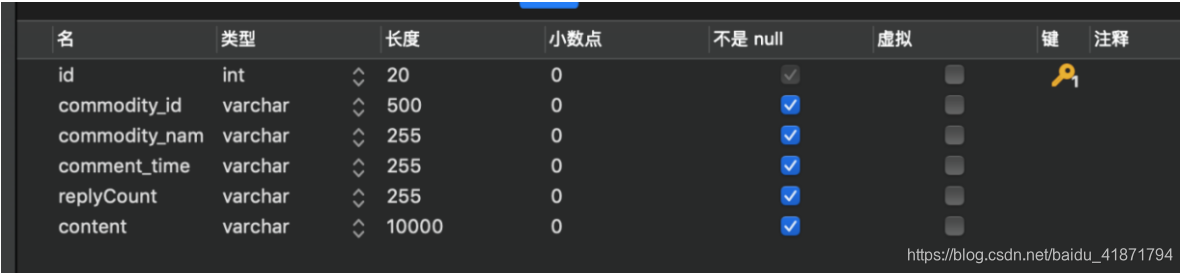

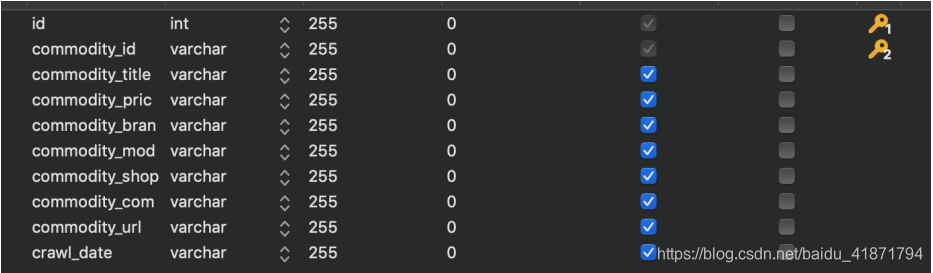

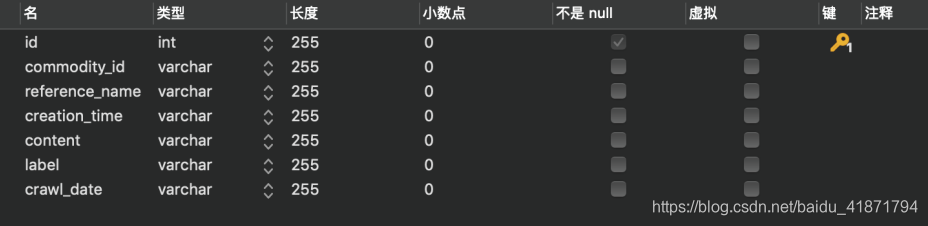

【3】数据库表的设计

【4】代码实现https://github.com/ccclll777/JDSNCompare

import jsonimport osimport pickleimport reimport randomimport timeimport requestsfrom bs4 import BeautifulSoupclass Assistant (object def __init__ (self ): self.username = '' self.nick_name = '' self.is_login = False self.User_Agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36" self.headers = { 'User-Agent' : self.User_Agent } self.sess = requests.session() self.item_cat = dict () self.item_vender_ids = dict () self.item_states = dict () self.risk_control = '' try : self.load_cookies() except Exception: pass def load_cookies (self ): cookies_file = '' for name in os.listdir('./cookies' ): if name.endswith('.cookies' ): cookies_file = './cookies/{0}' .format (name) break with open (cookies_file, 'rb' ) as f: local_cookies = pickle.load(f) self.sess.cookies.update(local_cookies) self.is_login = self._validate_cookies() def _validate_cookies (self ): url = 'https://order.jd.com/center/list.action' payload = { 'rid' : str (int (time.time() * 1000 )), } try : resp = self.sess.get(url=url, params=payload, allow_redirects=False ) if resp.status_code == requests.codes.OK: return True except Exception as e: print(e) self.sess = requests.session() return False def _save_cookies (self ): cookies_file = './cookies/{0}.cookies' .format (self.nick_name) directory = os.path.dirname(cookies_file) if not os.path.exists(directory): os.makedirs(directory) with open (cookies_file, 'wb' ) as f: pickle.dump(self.sess.cookies, f) def _get_login_page (self ): url = "https://passport.jd.com/new/login.aspx" page = self.sess.get(url, headers=self.headers) return page def _get_QRcode (self ): url = 'https://qr.m.jd.com/show' payload = { 'appid' : 133 , 'size' : 147 , 't' : str (int (time.time() * 1000 )), } headers = { 'User-Agent' : self.User_Agent, 'Referer' : 'https://passport.jd.com/new/login.aspx' , } resp = self.sess.get(url=url, headers=headers, params=payload) if resp.status_code !=requests.codes.OK: print('获取二维码失败' ) return False QRCode_file = 'QRcode.png' with open (QRCode_file, 'wb' ) as f: for chunk in resp.iter_content(chunk_size=1024 ): f.write(chunk) print('二维码获取成功,请打开京东APP扫描' ) if os.name == "nt" : os.system('start ' + QRCode_file) else : if os.uname()[0 ] == "Linux" : os.system("eog " + QRCode_file) else : os.system("open " + QRCode_file) return True def login_by_QRcode (self ): """二维码登陆 """ if self.is_login: print('登录成功' ) return True self._get_login_page() if not self._get_QRcode(): print('登录失败' ) return False ticket = None retry_times = 90 for _ in range (retry_times): ticket = self._get_QRcode_ticket() if ticket: break time.sleep(2 ) else : print('二维码扫描出错' ) return False if not self._validate_QRcode_ticket(ticket): print('二维码登录失败' ) return False else : print('二维码登录成功' ) self.nick_name = self.get_user_info() self._save_cookies() self.is_login = True return True def get_user_info (self ): """获取用户信息 :return: 用户名 """ url = 'https://passport.jd.com/user/petName/getUserInfoForMiniJd.action' payload = { 'callback' : 'jQuery{}' .format (random.randint(1000000 , 9999999 )), '_' : str (int (time.time() * 1000 )), } headers = { 'User-Agent' : self.User_Agent, 'Referer' : 'https://order.jd.com/center/list.action' , } try : resp = self.sess.get(url=url, params=payload, headers=headers) begin = resp.text.find('{' ) end = resp.text.rfind('}' ) + 1 resp_json = json.loads(resp.text[begin:end]) return resp_json.get('nickName' ) or 'jd' except Exception: return 'jd' def _validate_QRcode_ticket (self, ticket ): url = 'https://passport.jd.com/uc/qrCodeTicketValidation' headers = { 'User-Agent' : self.User_Agent, 'Referer' : 'https://passport.jd.com/uc/login?ltype=logout' , } resp = self.sess.get(url=url, headers=headers, params={'t' : ticket}) if resp.status_code !=requests.codes.OK: return False resp_json = json.loads(resp.text) if resp_json['returnCode' ] == 0 : return True else : print(resp_json) return False def _get_QRcode_ticket (self ): url = 'https://qr.m.jd.com/check' payload = { 'appid' : '133' , 'callback' : 'jQuery{}' .format (random.randint(1000000 , 9999999 )), 'token' : self.sess.cookies.get('wlfstk_smdl' ), '_' : str (int (time.time() * 1000 )), } headers = { 'User-Agent' : self.User_Agent, 'Referer' : 'https://passport.jd.com/new/login.aspx' , } resp = self.sess.get(url=url, headers=headers, params=payload) if resp.status_code !=requests.codes.OK: print('获取二维码扫描结果出错' ) return False begin = resp.text.find('{' ) end = resp.text.rfind('}' ) + 1 resp_json = json.loads(resp.text[begin:end]) if resp_json['code' ] != 200 : print('Code: {0}, Message: {1}' .format (resp_json['code' ], resp_json['msg' ])) return None else : print('已完成手机客户端确认' ) return resp_json['ticket' ]

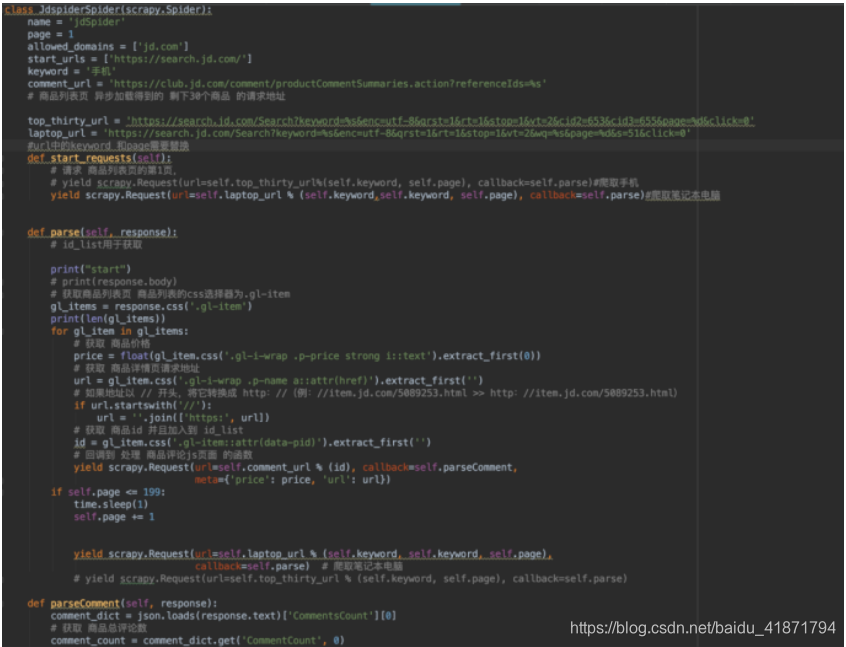

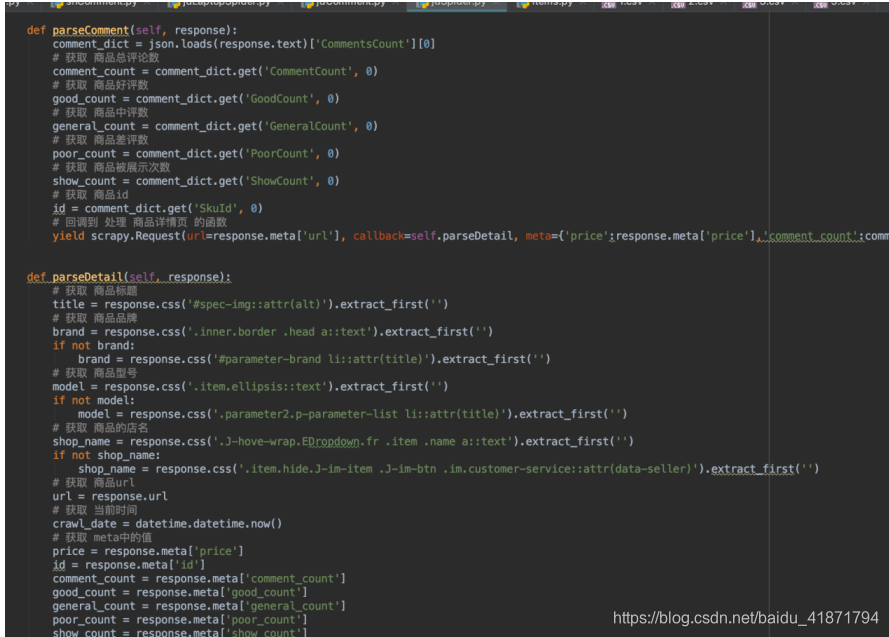

(2)京东商品信息的代码,使用scrapy框架,只贴出有关爬虫逻辑的重要部分

import urllib.requestimport jsonimport timeimport randomimport pymysql as pymysqlfrom random import choiceimport sys''' 爬取京东的评论 由于可以找到对应的接口 所以没有使用scrapy 解析json即可 ''' DEFAULT_REQUEST_HEADERS = { 'Accept' : ' */*' ,'Accept-Encoding' : 'gzip, deflate, br' ,'Accept-Language' : 'zh-CN,zh;q=0.9' ,'User-Agent' : 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36' ,'cookie' :'__jdu=15806122687801051175906; shshshfpa=d7d62fe8-302d-0eef-66ba-ff2b34d256a6-1580613199; shshshfpb=ptpp5JVNIFs%2FOKJmShsleow%3D%3D; pinId=0j9tP6mmaSFNEgXcNiCsZrV9-x-f3wj7; pin=jd_45a3a96f0dce8; unick=jd_45a3a96f0dce8; _tp=1S2A2FEea%2BptNQ%2BXOUNIjyaAn1cq2%2BrRzUshb6mUkKg%3D; _pst=jd_45a3a96f0dce8; user-key=fee67ccf-58e4-4adb-b458-3ee0ba2b47d3; ipLocation=%u5c71%u897f; unpl=V2_ZzNtbUdQQhQiChQALxkMUmILFVVLUEEVdlwRXHkfWVIzChtZclRCFnQUR1dnGlsUZwsZX0RcQRxFCEVkeBlUDWAAFVREZ3Mldgh2VUsZWAxmBBJeQVBKE3wJRlV%2fGVwDYwsTWnJnRBV8OHYGJkEOXyVHTW1KX0YQcThHZHsRXAJnChBYRlZzXhsJC1R%2fEF0CZwARWktRShR1CUJUex9YDWYEIlxyVA%3d%3d; __jdv=76161171|www.taobap.com|t_219962687_|tuiguang|4600f3cee1af496986312ef9374fe885|1582717059275; areaId=6; ipLoc-djd=6-303-36783-0; PCSYCityID=CN_140000_140100_140109; TrackID=1APbutj1lK16uXpxdIo35ZLUlCVHS37YbWy4ACoGrq_YbCTbZ_OHR55MDDThDq3N0RBQz6SIJ6L-T_UfLk-ZlCyfeDrwfoErqSMFSrY61r5inA8_5X2CIktUW9ynUz0RJ; cn=1; 3AB9D23F7A4B3C9B=HF4B3KEULPAGQU5ZK3A7U6ZLTA3GWQ2HEMSSHRFEKDYSXUGJSTH7DF7POYHZSCHJ465QTNAB4GWQAGAXPBDOQVHKUM; shshshfp=d5fee5b234e13324bd45d9b023635ae4; __jda=122270672.15806122687801051175906.1580612269.1582972811.1582975798.28; __jdc=122270672; shshshsID=9ebd788cef6e998f5d7fb9ae641dad31_2_1582975799167; SL_GWPT_Show_Hide_tmp=1; SL_wptGlobTipTmp=1; __jdb=122270672.2.15806122687801051175906|28.1582975798' ,'Cache-Control' : 'no-cache' ,'Connection' : 'keep-alive' ,'Host' : 'club.jd.com' ,'Pragma' :' no-cache' ,'upgrade-insecure-requests' : '1' ,'Sec-Fetch-Dest' : 'script' ,'Sec-Fetch-Mode' : 'no-cors' ,'Sec-Fetch-Site' : 'same-site' } ''' ''' fw = open ('result3.csv' ,'w' ) def crawlProductComment (url, skuid ): try : html = urllib.request.urlopen(url=url).read().decode('gbk' , 'ignore' ) except Exception: return -1 data = json.loads(html) if len (data['comments' ]) == 0 : return -1 for comment in data['comments' ]: product_name = comment['referenceName' ] comment_time = comment['creationTime' ] content = comment['content' ] replyCount = comment['replyCount' ] print(product_name) print(comment_time) print(content) fw.write('{},{},{},{}' .format (product_name.replace('\n' ,'' ),comment_time.replace('\n' ,'' ),content.replace('\n' ,'' ),replyCount)) fw.write('\n' ) connection = pymysql.connect(host="127.0.0.1" , user="root" , passwd="SSwns304275" , db="JDP" , port=3306 , charset="utf8" ) try : with connection.cursor() as cursor: sql = "insert into comment(commodity_id,commodity_name,comment_time,replyCount,content) values (%s,%s,%s,%s,%s)" cursor.execute(sql, (skuid, product_name, comment_time,replyCount, content)) connection.commit() finally : connection.close() return 1 def crawl_main (skuid ): i = 0 retry_times = 6 while True : url = 'https://club.jd.com' + '/comment/productPageComments.action' \ '?productId=' + str (skuid) + \ '&score=0' \ '&sortType=6' \ '&page=' + str (i) + \ '&pageSize=10' \ '&isShadowSku=0' \ '&fold=1' i = i + 1 print(url) if crawlProductComment(url, skuid) == -1 : retry_times = retry_times - 1 if retry_times < 0 : break i = i - 1 randint = random.randint(3 , 10 ) while randint > 0 : print("\r" , "{}th retrying in {} seconds..." .format (6 - retry_times, randint), end='' , flush=True ) randint -= 1 else : retry_times = 2 print("\r" , "{}th page has been saved." .format (i), end='' , flush=True ) if i == 20 : break fr = open ("jdUrl.csv" , "r" ) id_list = fr.readlines() list = id_list[0 ].split(',' );for i in list : crawl_main(i)

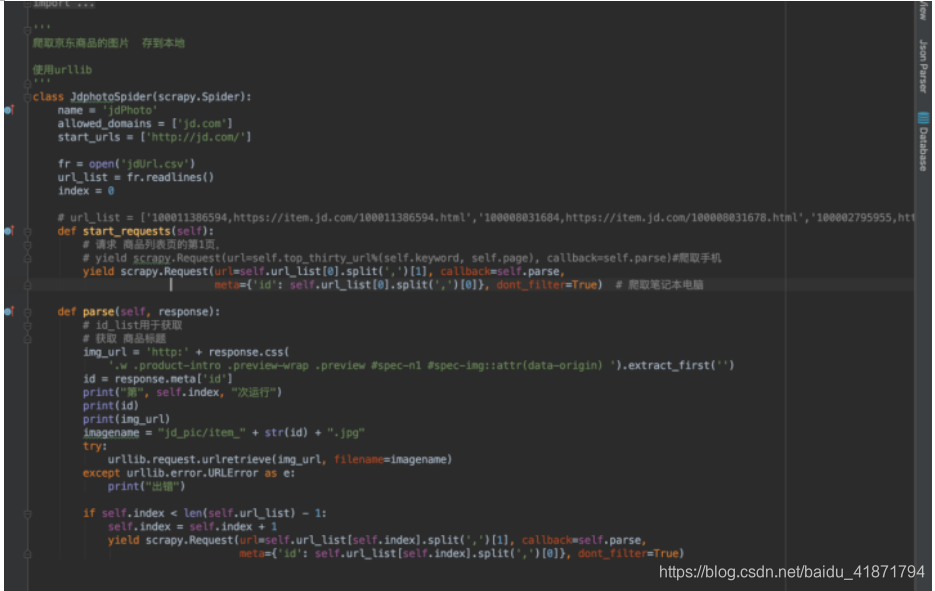

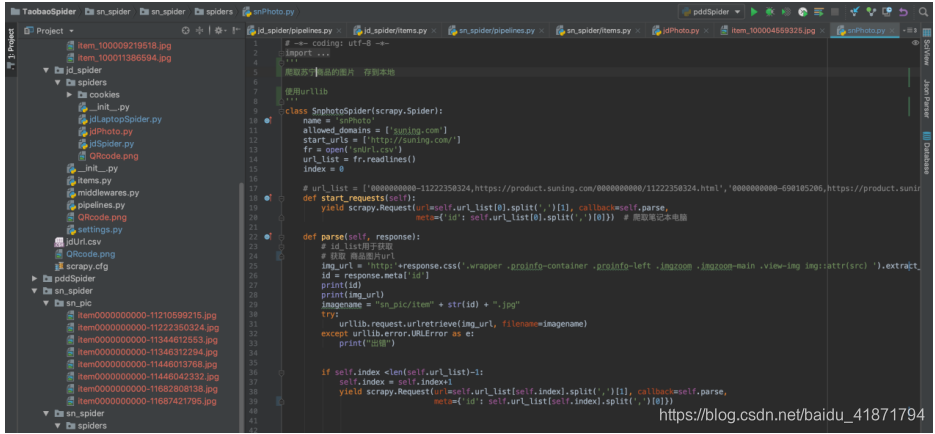

(4)京东获取图片的代码

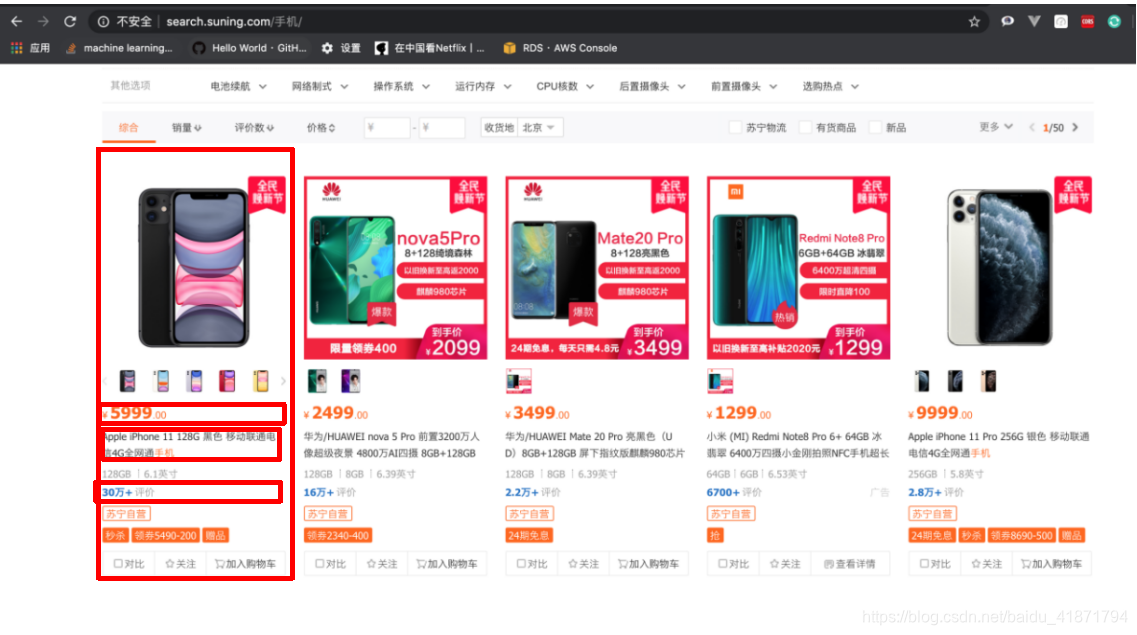

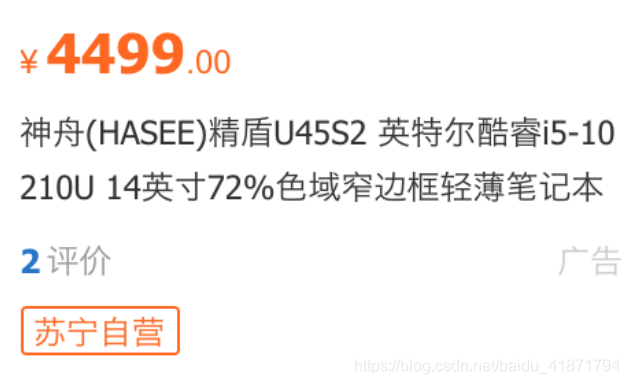

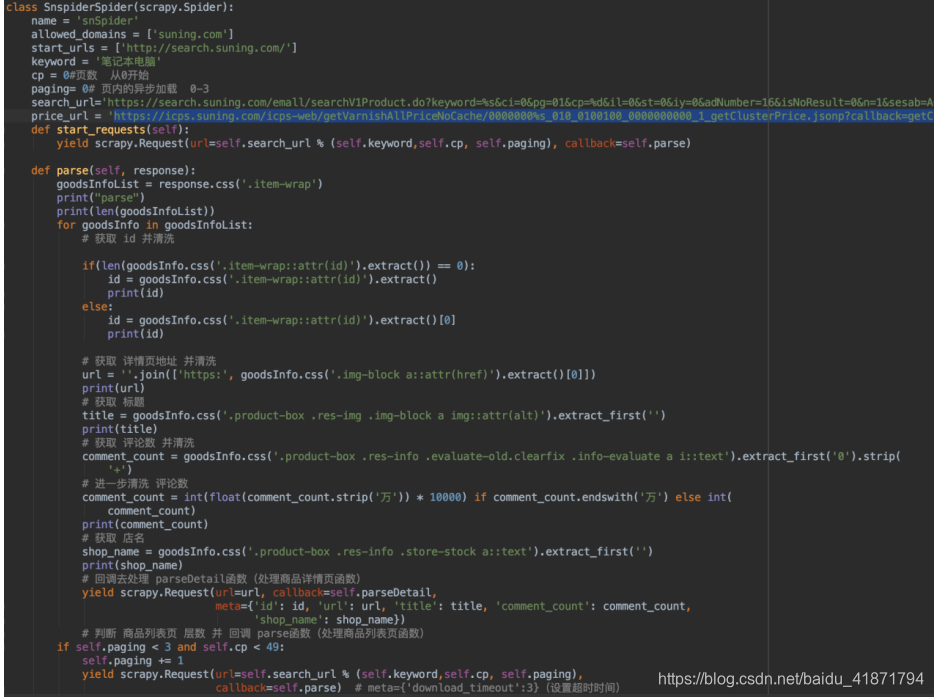

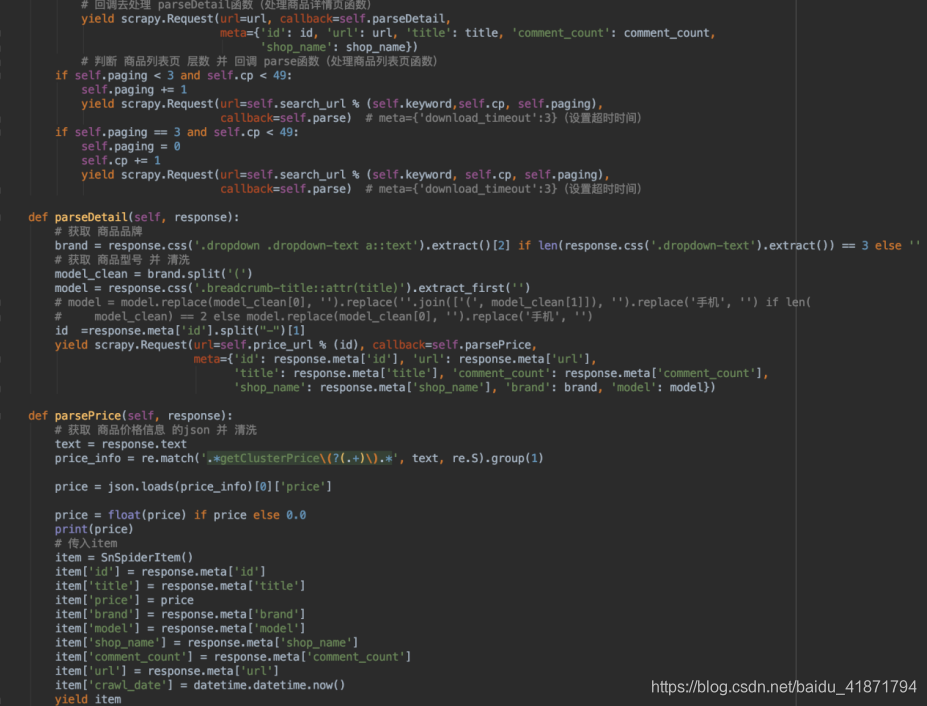

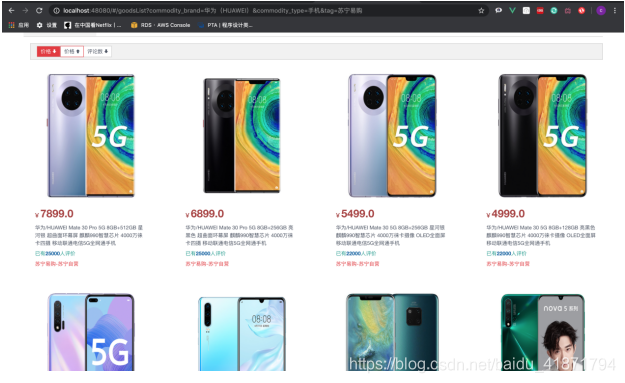

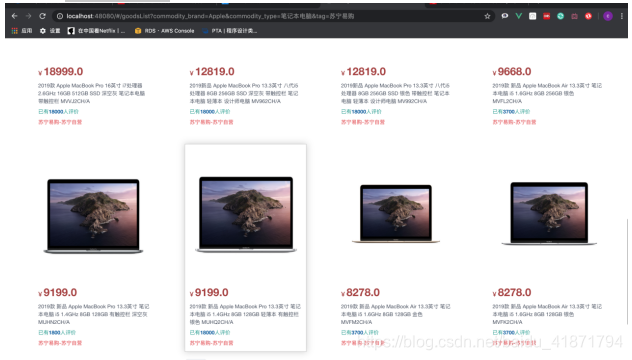

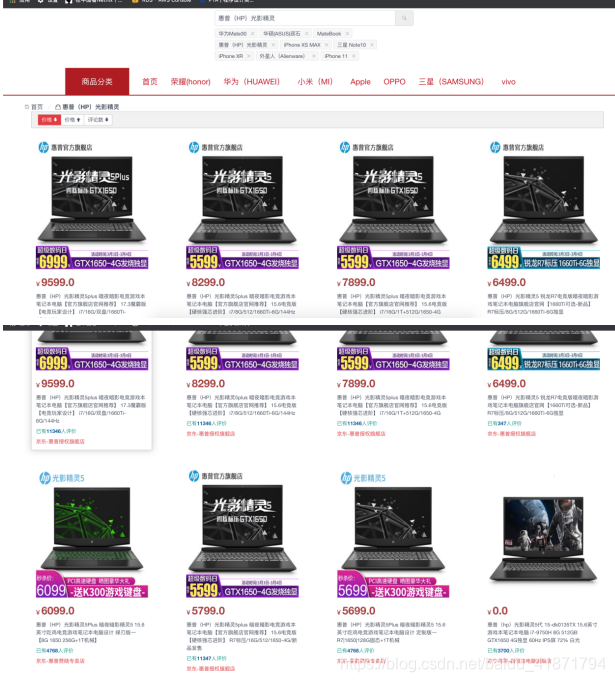

爬取苏宁易购的搜索界面,同样搜索关键词为“手机”和“笔记本电脑”的信息。目标站点的URL为:

https://search.suning.com/emall/searchV1Product.do?keyword={}&ci=0&pg=01&cp={}&il=0&st=0&iy=0&adNumber=16&isNoResult=0&n=1&sesab=ACAABAABCAAA&id=IDENTIFYING&cc=010&paging={}&sub=0&jzq=112901

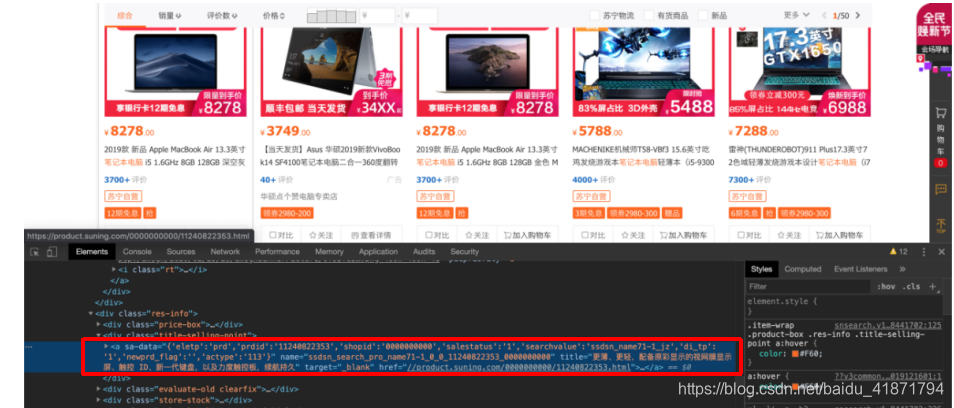

【1】苏宁网站记录分析

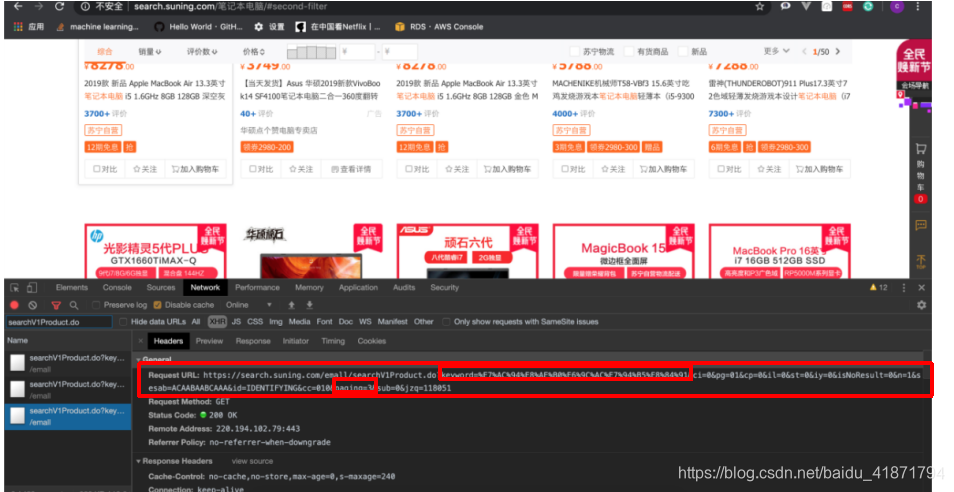

【2】操作过程

https://search.suning.com/emall/searchV1Product.do?keyword={}&ci=0&pg=01&cp={}&il=0&st=0&iy=0&adNumber=16&isNoResult=0&n=1&sesab=ACAABAABCAAA&id=IDENTIFYING&cc=010&paging={}&sub=0&jzq=112901

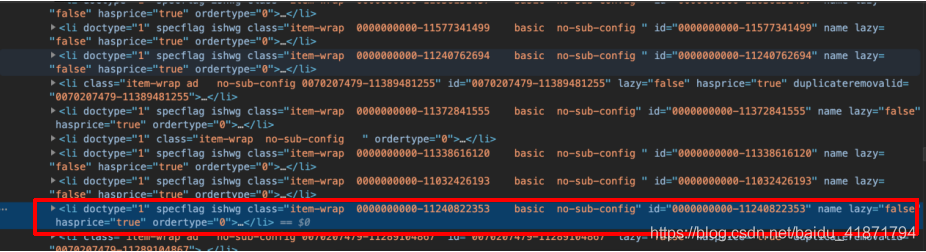

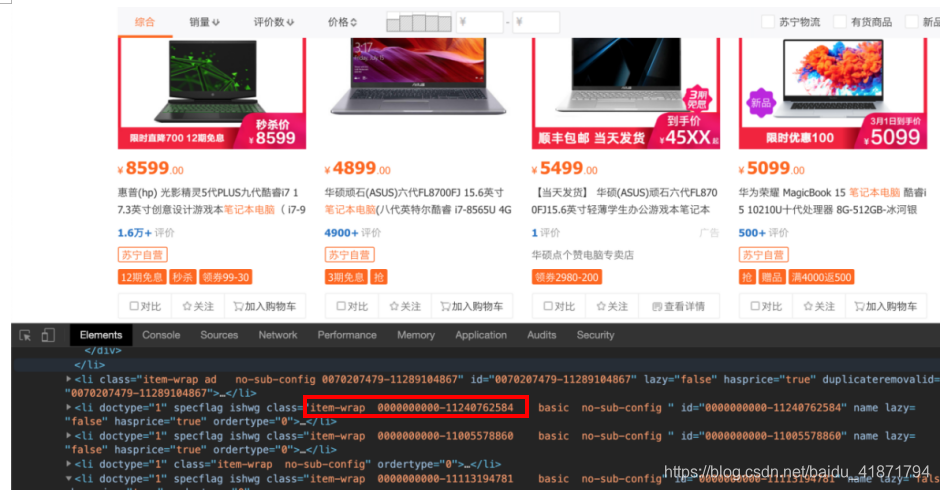

每页分为4层,直接请求 商品列表页地址 的话,只会显示第一层,其余 3层 是用户下拉后 异步加载出来的,使用抓包的方法可以截取到,所以每一页都分为 4步来分别请求

.product-box .res-img .img-block a::attr(href)

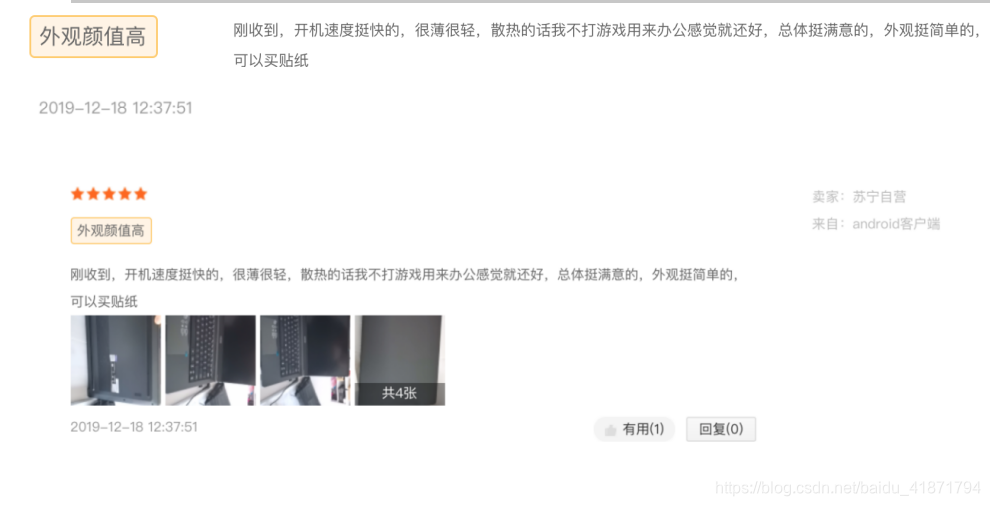

.product-box .res-info .evaluate-old.clearfix .info-evaluate a

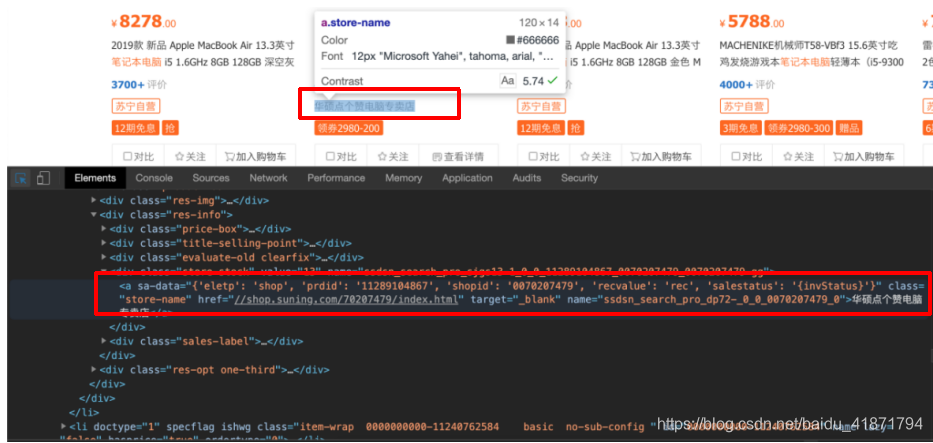

.product-box .res-info .store-stock a::text

.product-box .res-img .img-block a img::attr(alt)

对于商品价格的爬取,本来以为是界面上的,经过分析发现,商品的价格是异步加载的。

https://icps.suning.com/icps-web/getVarnishAllPriceNoCache/0000000{id}_010_0100100_0000000000_1_getClusterPrice.jsonp?callback=getClusterPrice

商品的id

商品的品牌

.dropdown .dropdown-text a::text

.breadcrumb-title::attr(title)

https://icps.suning.com/icps-web/getVarnishAllPriceNoCache/0000000%s_010_0100100_0000000000_1_getClusterPrice.jsonp?callback=getClusterPrice

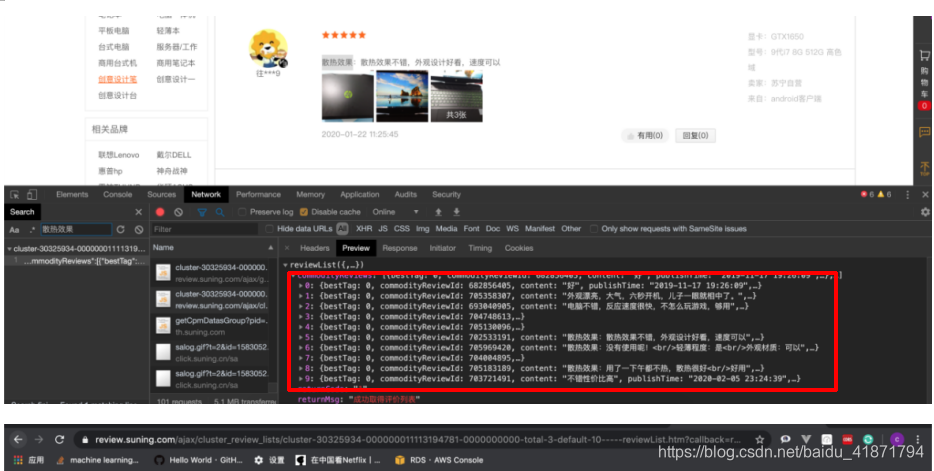

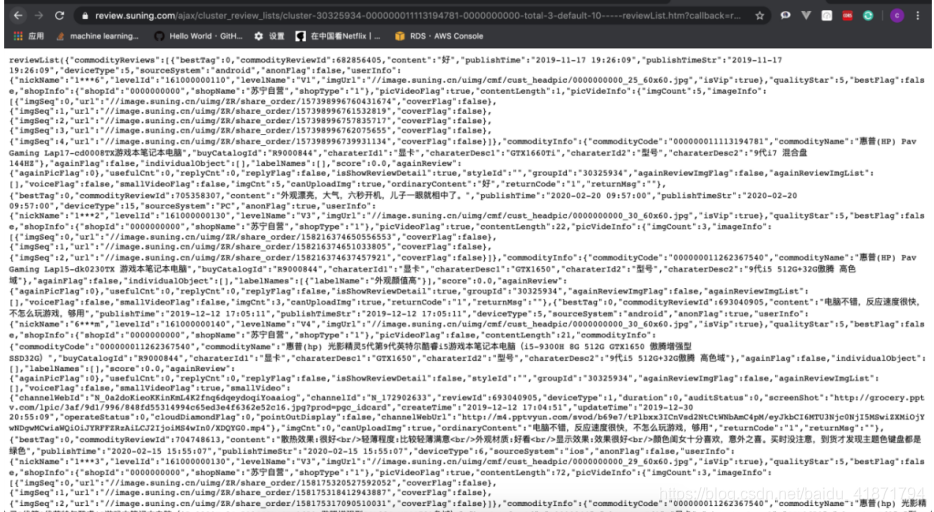

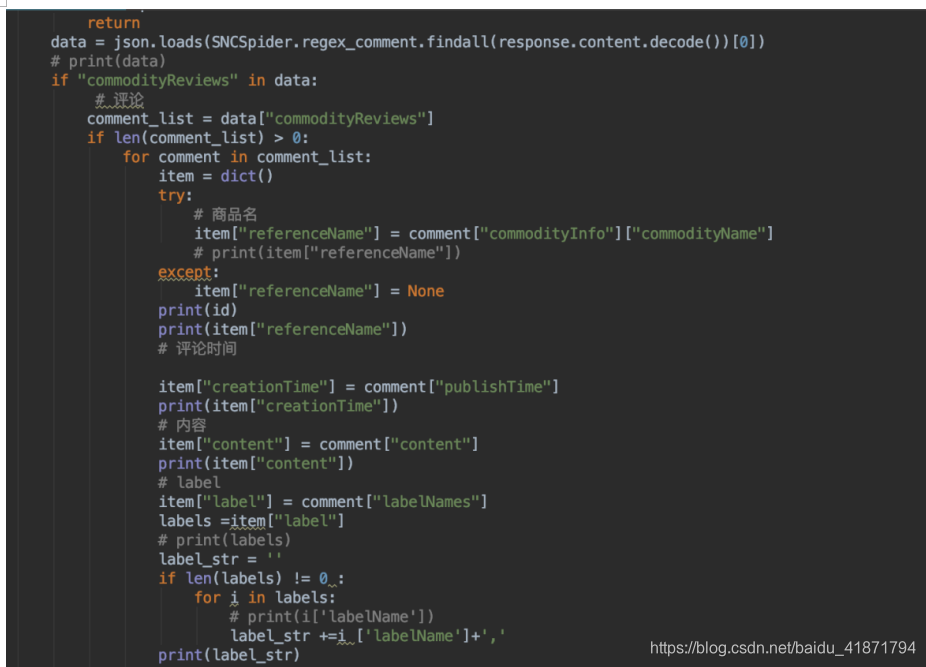

https://review.suning.com/ajax/cluster_review_lists/general-00000000-{}-0000000000-total-{}-default-10-----reviewList.htm

【3】数据库表的设计https://github.com/ccclll777/JDSNCompare

https://icps.suning.com/icps-web/getVarnishAllPriceNoCache/0000000{id}_010_0100100_0000000000_1_getClusterPrice.jsonp?callback=getClusterPrice

【0】项目技术栈http://39.105.44.114:38888/comparePrice/index.html